Instagram today is rolling out a set of new features aimed at helping people protect their accounts from abuse, including offensive and unwanted comments and messages. The company will introduce tools for filtering abusive direct message (DM) requests, as well as a way for users to restrict who can post comments or send DMs during spikes of increased attention, such as when a post goes viral. In addition, people who attempt to harass others on the service will see stronger warnings against doing so, which detail the potential consequences.

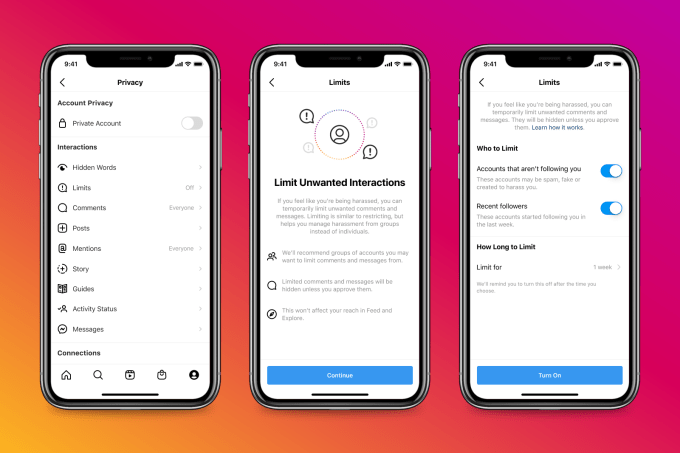

The company recently confirmed it was testing the new anti-harassment tool, Limits, which Instagram head Adam Mosseri referenced in a video update shared with the Instagram community last month. The feature aims to give Instagram users an easy way to temporarily lock down their accounts when they’re targeted with a flood of harassment.

Such an addition could have been useful to combat the recent racist attacks that took place on Instagram following the Euro 2020 final, which saw several England footballers viciously harassed by angry fans after the team’s defeat. The incidents, which included racist comments and emoji, raised awareness of how little Instagram users could do to protect themselves when they go viral in a negative way.

Image Credits: Instagram

During these sudden spikes of attention, Instagram users see an influx of unwanted comments and DM requests from people they don’t know. The Limits feature lets users choose who can interact with them during these busy times.

From Instagram’s privacy settings, you’ll be able to toggle on limits that restrict accounts that are not following you as well as those belonging to recent followers. When limits are enabled, these accounts can’t post comments or send DM requests for a period of time of your choosing, like a certain number of days or even weeks.

Twitter has been eyeing a similar set of tools for users who go viral, but has yet to put them into action.

Instagram’s Limits feature had already been in testing, but is now becoming globally available.


The company says it’s currently experimenting with using machine learning to detect a spike in comments and DMs in order to prompt people to turn on Limits with a notification in the Instagram app.

Another feature, Hidden Words, is also being expanded.

Designed to protect users from abusive DM requests, Hidden Words automatically filters requests that contain offensive words, phrases and emojis and places them into a Hidden Folder, which you can choose to never view. It also filters out requests that are likely spam or are otherwise low-quality. Instagram doesn’t publish the list of words it blocks, to prevent people from gaming the system, but it has now updated that list with new types of offensive language, including strings of emoji like those that were used to abuse the footballers.

Hidden Words had already rolled out to a handful of countries earlier this year, but will reach all Instagram users globally by the end of the month. Instagram will encourage accounts with larger followings to use it through prompts in both their DM inbox and their Stories tray.


Hidden Words was also expanded with a new “Hide More Comments” option, which lets users easily hide comments that are potentially harmful but don’t break Instagram’s rules.

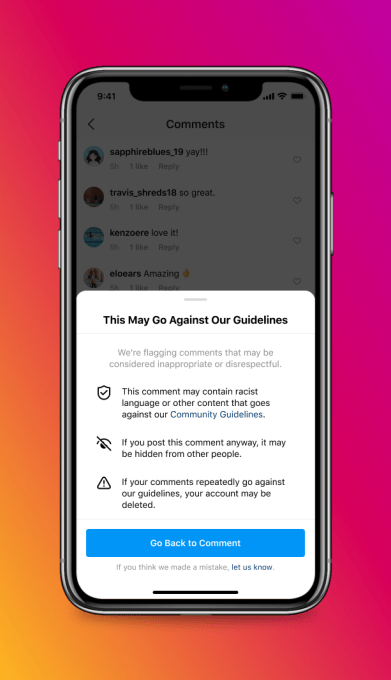

Another change involves the warnings displayed when someone posts a potentially abusive comment. Previously, Instagram warned users the first time they tried to post such a comment, and showed an even stronger warning only after they tried to post potentially offensive comments multiple times. Now, the company says users will see the stronger message the first time around.

Image Credits: Instagram

The message clearly states the comment may “contain racist language” or other content that goes against its guidelines, and reminds users that the comment may be hidden as a result once it’s posted. It also warns that if the user continues to break the community guidelines, their account “may be deleted.”

While systems to counteract online abuse are necessary and underdeveloped, there’s also the potential for such tools to be misused to silence dissent. For example, if a creator was spreading misinformation or conspiracies, or had people calling them out in the comments, they could turn to anti-abuse tools to hide the negative interactions. This would allow the creator to paint an inaccurate picture of their account as one that was popular and well-liked. And that, in turn, can be leveraged into marketing power and brand deals.

As Instagram puts more power into creators’ hands to handle online abuse, it has to weigh the potential impacts those tools have on the overall creator economy, too.

“We hope these new features will better protect people from seeing abusive content, whether it’s racist, sexist, homophobic or any other type of abuse,” noted Mosseri, in an announcement about the changes. “We know there’s more to do, including improving our systems to find and remove abusive content more quickly, and holding those who post it accountable.”